In “Supporting the ‘lived expertise’ of older adults with type 1 diabetes: An applied focus group analysis to characterize barriers, facilitators, and strategies for self-management in a growing and understudied population” (Cristello Sarteau et al., 2024), the authors report on their study of care management among older adults (OAs, defined as adults 65 years of age or older) with type 1 diabetes. This research consisted of nine in-person focus group discussions with a total of 33 OAs and caregivers.

Central to the design and implementation of this research was the Total Quality Framework (TQF) (Roller & Lavrakas, 2015). The authors selected the TQF because of their focus on rigor and on a quality approach to investigating the lived experiences of OAs with type 1 diabetes. In their words:

> To support rigorous research and reporting, we selected the Total Quality Framework (TQF), a comprehensive set of evidence-based criteria for limiting bias and promoting validity in all phases of the applied qualitative research process. (p. 2)

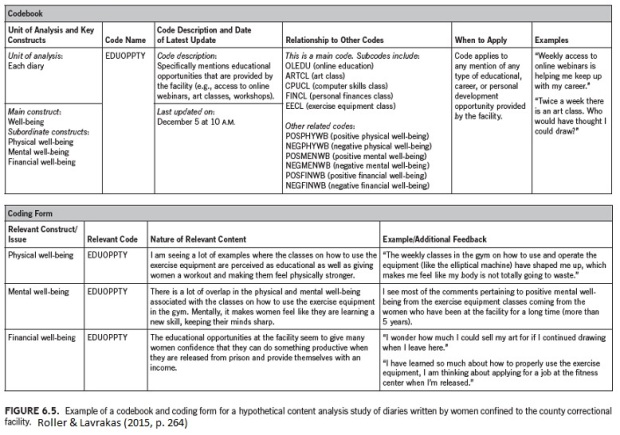

In this article, the authors provide a unique and useful table describing the rationale behind their methodological decisions pertaining to each component of the TQF, i.e., Credibility, Analyzability, Transparency, and Usefulness. For example, with respect to Credibility, the table offers a lengthy discussion of sample design, including the impact of limited resources on the recruitment process and why the size of each group discussion was kept to 4-5 participants. Other areas of discussion in the table include the coding format and identification of themes (Analyzability), complete disclosure of elements related to design, data collection, and analysis (Transparency), and “how the study should be interpreted, acted upon, or applied in other research context in the real world” (Usefulness). Importantly, readers are directed to areas within the article where they can read about the explanations of methodological decisions that go beyond the limited space of the table, e.g., definition of the target population.

This research “revealed, above all, the complex and dynamic nature of managing type 1 diabetes over the lifespan” and provided “valuable foundational information for future research efforts” (p. 13). In addition to the perceived strengths of the research, the authors’ quality approach also allowed for an informed discussion of the limitations (e.g., diversity in the sample). By way of the TQF Transparency component, the authors provide readers with the details they need to build on this research and move forward in defining care-management solutions for the OA population with type 1 diabetes. As the authors state, the TQF enabled them to “promote confidence in using results from our study to inform future decision-making” (p. 17).

Cristello Sarteau, A., Muthukkumar, R., Smith, C., Busby‐Whitehead, J., Lich, K.H., Pratley, R.E., Thambuluru, S., Weinstein, J., Weinstock, R.S., Young, L.A. and Kahkoska, A.R., 2024. Supporting the ‘lived expertise’ of older adults with type 1 diabetes: An applied focus group analysis to characterize barriers, facilitators, and strategies for self‐management in a growing and understudied population. Diabetic Medicine, 41(1), e15156.